I believe in belief. A full essay on that particular statement I will save for a future blog entry. For the moment, suffice it to say that I believe that belief itself is a critical aspect of reality, perhaps the most important part. What we believe determines how we behave, which in turn determines so much of the reality we engage. Our lives are literally shaped by the confines of our beliefs. Even the most hard-core realist must concede that the universe we see is only the universe we see (the one in our head), reflected on the back of our brains as shadows on a wall, colored invariably with the palette of our own beliefs. There’s more to say about that, but not here and now. Right now I want to share some thoughts I’ve entertained recently around belief and artificial intelligence.

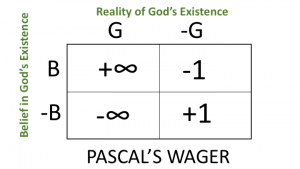

The title of this entry comes from Blaise Pascal’s famous wager for the existence of God. Pascal asserted that it is more logical to believe in God than not, regardless of God’s existence. A 2×2 matrix is often cited, providing a pretty good rationale for belief in the divine as such.

Does God Exist?

Clearly it is a simple matrix. And yes, I absolutely acknowledge the fact that a lot of smart people have taken issue with Pascal for one reason or another. But as a straw man, Pascal’s Wager has been around for a relatively long time and still has value as a thought experiment if nothing else.

With a nod to Pascal’s work, this entry hopes to offer some thoughts to consider as we embark on this particular chapter of the Network Age, which we may christen the Neural Network Age. If you’re not familiar with neural networks, learn about them. Perhaps more than any previous innovation, neural networks have the potential to radically transform everything we think we know as human beings. That is a bold statement. Such bold statements require elaboration at the very least, if not outright proof.

Enter Byron Reese: entrepreneur, futurist, author, inventor, speaker. His most recent tome, The Fourth Age, lays out the case for strong AI. Not narrow, application-specific algorithms or stochastic sieves that feed back and feed forward and sometimes exhibit actual learning from data over time, but Artificial General Intelligence. Conscious computers. Machines that think, feel, and are actual sentient beings as much as you and I are beings. Reese is an accomplished radical optimist, and I like his thinking. But, as should always be the case with radical thinkers of all flavors, lest we become a claque, I take issue with some of it. So, for the sake of discussion, casting Reese as Pangloss to our Candide, let’s consider Conscious Computers.

Per Reese, three big questions shape our beliefs, and hence our worldview. These foundational beliefs are also germane to how we view the possibility of AGI becoming actual. Are Conscious Computers even possible? Your answers to these questions underpin your beliefs regarding AGI:

1. What is the composition of the universe? Your belief, regardless of detail, probably falls into one of two categories: monist or dualist. Either everything in the universe is physically apparent, all arising from one common source, as it were, and that is all there is, or there exists a duality, with mind and brain as distinct. The former is monist, the latter is dualist. René Descartes was the ultimate dualist: the immaterial mind and the material body, while being ontologically distinct substances, causally interact. The Greek philosopher Democritus was the first declared monist, a position that views the many different substances of the world as being of the same kind. Which are you? Monist or Dualist?

2. What are we? Your belief is one of three:

   1. MACHINES

   2. ANIMALS

   3. HUMANS (something beyond machine and/or animal)

3. What is your “self”, your consciousness? You have three choices:

   1. An illusion produced by your brain, a trick

   2. An emergent property of brain activity

   3. Something involving the brain, but beyond or outside the brain

Per Reese, you are more likely to believe that AGI, or Conscious Computers, can and will exist if you fall neatly into the monist group. Many scientists are monists. The Scientific Method practically requires a monist bias. If you are purely dualist, you are likely very skeptical of the possibility of AGI: you simply don’t believe it can happen or that we can create it. I claim there is a middle ground, neither monist nor dualist but both. Sort of a Forrest Gump perspective: it’s both, at the same time. Like light, both particle and wave. Yes we are machines, yes we are animals, yes we are human. Yes my ‘self’ is an illusion, a trick of the brain, but my ‘self’ is also an emergent property of brain activity, and yes it is also separate from my currently measurable physical apparatus. I have never had a cognitive problem embracing paradox. But that’s me. That’s not everybody. And that’s not the point of this entry.

The point of this entry is how belief impacts outcome, akin to Pascal’s original Wager.

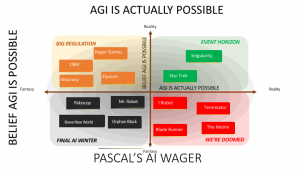

We can pretty much line up dualists with God B+ in Pascal’s breakdown and monists with God B-. As for the middle ground, let’s stipulate that each side gets half of that subset, so we are able to create a 2×2 matrix for Pascal’s AI Wager:

ARE CONSCIOUS COMPUTERS POSSIBLE?

So the question of AGI is depicted with reality on one axis and belief on the other.

If we do not believe AGI is possible, and it is, modern mythology paints a universally dark future. We are doomed. Infinite bad. If we do not believe and it happens, then we will be surprised, like when one day Skynet comes alive and decides to eliminate the pesky threat of mankind. Or when our humanoid robots rebel violently. From The Terminator to The Matrix to I, Robot to Blade Runner, if we do not believe Conscious Computers are possible and we are wrong, the outcome is bad. Very, very bad. At least insofar as our collective imaginations are concerned, as manifested in modern culture.

If we do not believe AGI is possible, and it is not possible, our futures may be less bleak but they are still not good. Our mythologies suggest continued investment in technology with dehumanizing outcomes like RoboCop, or Brave New World. If machines cannot think as humans then more human vessels are needed: cloning for body parts in the quest for immortality, for example, becomes the focus as we realize that uploading consciousness to digital offspring just won’t do the trick. This quadrant may also imply a slide into a repressive quagmire of indolence and dullness, as in Mr. Robot or Ready Player One. I call this quadrant Final AI Winter, because we no longer believe in AGI and our investments in narrow AI (ANI) will have dislocated much of our humanity from us. This too is bad. Perhaps not infinite bad, but nonetheless very bad.

That’s if we do not believe. But what if we do believe?

If we believe Conscious Computers are possible, and they are not possible, our mythologies point to an alternate set of futures, none of which can be painted as terribly good. I call this quadrant Big Regulation because if we do believe, we will invest accordingly and take precautions against the Terminator quadrant. We will rely on machines, but only to a certain point. And alas, the ability of technology to magnify the worst in us becomes the norm. People become redundant, and the displacement of human labor will be met with equal parts despair and alienation. The classic future here is Orwell’s 1984, followed closely by The Hunger Games, Idiocracy, Elysium and others. So far, nothing very good here at all. So again, it’s bad.

Finally, if we do believe Conscious Computers are possible, and AGI is possible, the future may perhaps be Star Trek TNG, with Data as our Pinocchio surrogate. What is the great quest for AI after all, if not to create human intelligence in a machine? But here too lie dragons. This is the quadrant of Kurzweil’s Singularity. The moment an intelligence emerges on our planet that is smarter than the smartest human, and that intelligence is fully capable of rapid replication and improvement of self, all bets are off. We cannot predict what will happen. We have no rules, no concepts, no game plans to fall back on. But at least, if we believe and it does happen, we will have beckoned forth those angels and girded our loins as much as possible for the consequences. This quadrant is the Event Horizon. We simply cannot know what lies beyond. Is it good? Is it perhaps the realization of Omega Point Theory, effectively Bootstrapping God? We cannot know.

I present this as Pascal’s AI Wager. It’s neither as clean nor as compelling as the original. But I think there is merit in considering what we believe in the aggregate juxtaposed with what will actually occur.

It is interesting to note that the monists who were the effective losers in Pascal’s Wager come out on the better side of Pascal’s AI Wager... maybe. Or maybe not. Maybe the best we can hope for is that AGI truly is a fantasy and can never actually happen. Even if ‘self’ is not a function of a dualist universe but rather an extremely rare and complex emergent property beyond engineering capabilities for millennia to come, at least then humanity may continue for a while longer, darkness notwithstanding. Would you rather survive in a Brave New Idiocracy World, or roll the dice on Star Trek knowing that Terminator, The Matrix, and God knows what else might be waiting just over the edge of the world?

I quite like Byron Reese’s latest book and strongly encourage you to read it. I wish I shared his radical optimism. I have long said that if there is hope for humanity it is in software, and I still believe that. But when it comes to AGI, I am an enthusiast, very curious but a bit cautious. You have heard it said to be careful what you wish for, as you just might get it. When it comes to Conscious Computers, though a dualist by nature, I am adopting the skeptical monist hat and suggest we all proceed with utmost caution, but proceed nevertheless. The truth is, from what we know and don’t know about human consciousness, in my view the probability of AGI happening any time soon is not much greater than it may have been 50 years ago. But just in case, Moore’s Law being what it is, we are well advised to exercise prudence on this journey.

………….

Remember those who died: 17 years ago today, 9/11/01 and 6 years ago today, 9/11/12

………….
