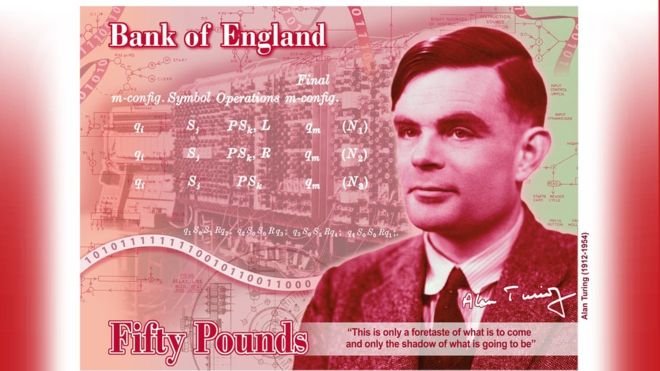

More than once I have observed that Alan Turing used the philosophical foil of solipsism in his seminal paper, "Computing Machinery and Intelligence," which introduced the Imitation Game, to argue that we cannot fully or completely specify what it means for a human to be conscious, and therefore we cannot measure machine intelligence beyond the allowances we typically make for other humans; to wit, the Turing Test. If we cannot distinguish between the machine and a human in written conversation, the machine is intelligent. To go beyond that simple test is to risk a slippery slope to absurd solipsistic isolation.

For some reason it has always bothered me that solipsism was one of Turing's arguments; a straw man, to be sure…but one that has egged me on. I mean, it's not necessarily a flawed argument. But there seems to be something a little disturbing hiding there behind the curtain of unprovable consciousness.

For years I felt a puzzling unease with the argument and I wasn’t sure why. Recently, however, it dawned on me what was bothering me, and I share with you now the nexus of that discontent. Solipsism itself is a little disturbing from an existential perspective. In the context of AI, the problem with solipsism might be even worse.

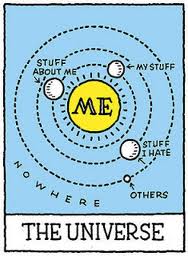

First let’s briefly consider the sociological and political implications of Turing’s foil – solipsism as a central theme in today’s post-post-modern era. From the Latin solus meaning “alone” and ipse meaning “self,” solipsism is the theory that the self is the only object of real knowledge that is possible, or the only thing that is provably real. This is to say that your own mind is all you can ever be sure of; essentially, your ground truth is your own consciousness. I myself have admittedly been seduced by the notion that my reality is one of my own creation, or at least my own interpretation. Just read my last blog entry for absolute proof of my own professed belief in belief, or rather, in my conscious interpretation of my reality being my actual reality.

So if my own mind, my perception, is equated with reality, and that perceptual flavor of belief is equally true of you (presuming you actually exist), then it follows that there is no actual objective reality – no shared ground truth. My truth, my reality, is mine and mine alone, as yours is yours. If we tacitly accept this as central to our perceptive capabilities and therefore to our existence, then it also follows that there is no path of actual truth, no spiritual journey to embark upon, and no transcendent enlightenment possible outside my own perceptive limitations. It is this spiritual dilemma that has been like a splinter in my mind – my own unease with the Matrix, as it were. On the one hand, I have long espoused a belief in belief. On the other, I entirely reject the idea of a universe with no shared, provable ground truth.

Whether we are talking about hard-core solipsism or a softer version, the idea that truth is non-existent is so very disturbing that I can no longer defend the idea that belief in belief is the sole or even primary filter through which we experience reality. At the most extreme, for the solipsist, nothing really exists. There are no actual experiences – it's all just perception.

If this extreme is demonstrated to be false, and we can prove that something actually exists, we still can never really know anything about it. We cannot really understand anything about it, whatever it may be, in its fullness, because we cannot experience that experience. This implies that there is no real knowledge, and therefore no truth.

Finally, if the two previous solipsistic apologies are proven wrong, and you can know that something exists and can even know the truth of the thing (despite Kant's *Ding an sich*), that knowledge (or truth) cannot be communicated, because it is too complex for human language to contain.

Do you see the problems that emerge? The anti-knowledge essence of solipsism pervades this era. When "It's All Good," like the Bob Dylan song, becomes the mantra of mendacity that glosses over the creeping societal dysfunction so well amplified by Network Age technologies, the cultural core of solipsism must be recognized for the profound and woefully damaging untruth that it is.

My soul rails at the assertion that it is all good! It is most certainly NOT all good! Today I see quite clearly that the big lie at the core of our culture is, in fact, the tacit acceptance of solipsism. I confess I have been seduced by the siren song of belief first and reality following, for which I am now deeply apologetic. Belief is vital. Belief is important to my well-being, my health, my happiness, and each interaction, each breath, of each and every day.

However, the belief that there is no provable objective truth amounts to accepting that we have no capacity for determining objectivity. That is to say, there is no mechanism for distinguishing right from wrong, good from evil, or light from dark. Any notion of Natural Law is rejected as outdated, and historical ethical norms become fashion at best, as easy to discard as a worn-out suit.

Science cannot be science without an understanding and acceptance of empirical evidence. It's called "ground truth." Direct observation by a dispassionate, objective observer gives rise to such truth, as opposed to knowledge that is inferred. In machine learning we use the term "ground truthing" to refer to the process of gathering the proper OBJECTIVE (provable) data for testing and proving research hypotheses. None of this would be possible without a wholesale rejection of the anti-knowledge bias of a culture so clearly orbiting a core of solipsism.

As we approach the third decade of this 21st century, Artificial Intelligence is the new black. It is in vogue, getting tons of R&D investment, and every day gives rise to news stories about the fear and fun of AI applications unleashed. AI is today what the Internet was in the mid-90s: hope, hype, and hysteria. Especially when it comes to AGI, or Artificial General Intelligence, AI is the undeniable zeitgeist champ of the century so far, with Kurzweil's Singularity playing the roles of both Y2K Specter II and Benign Human Zookeeper. Although we humans appear to be totally in control of the earth's climate, we evidently cannot control our own inventions.

But what if we are wrong? Specifically, what if we are wrong about intelligence? What if the best we can ever aspire to culturally is solipsism itself, and due entirely to our own misguided cultural biases we collectively chide our inventions into agreeing with us? What if the elimination of bias is successful to the point where it's really not about the data at all, but about some collectively misguided notion that is solipsistic at the rotten core? What if the recently announced super-advanced hybrid Tianjic chip, meant to stimulate the development of AGI (providing both CS-based and neuroscience-based programmable compute architectures in one amazing collection of cores), does actually give rise to a machine that is conscious, yes, but that simultaneously reaches the inescapable conclusion, based on our own collective biases baked in during training, that NONE of the inputs it receives are absolutely trustworthy or even real?

In an era of Fake News, Deep Fakes, gaslighting galore, a bumper crop of conspiracy theories and the undeniable reality of a world increasingly fractured by bifurcating world views (it's all good, remember?), what is a machine to do? Perspective must be grounded in something we can rely on to be true. Something must serve as a foundation from which and upon which consciousness might bootstrap. It's one thing to say that the machine will learn based on inputs. It's quite another to discern what is real without a ground truth outside myself.